Protect Your Privacy: Avoid Sharing Medical Images with AI Chatbots

Quick Summary

- AI chatbots aren’t HIPAA-compliant: They’re not designed to securely handle sensitive medical data.

- Risk of privacy breaches: Uploading medical images could expose your personal health information to misuse.

- Safer alternatives are available: Use HIPAA-compliant telemedicine platforms for secure consultations.

- Stay cautious online: Protect your medical privacy by avoiding unverified tools and platforms.

- Legal and ethical gaps exist: Current U.S. laws don’t adequately regulate general AI tools for medical data.

Imagine innocently seeking advice by uploading medical images to AI chatbots like ChatGPT or Bard, unaware of the hidden AI chatbot privacy risks. With the rise of AI in healthcare, these tools offer convenience but often lack transparency about AI and HIPAA compliance. Many users risk exposing sensitive data, assuming these platforms are secure. This article dives deep into understanding the potential dangers, offering insights into secure AI medical tools and AI data privacy tips. By learning how to navigate these technologies responsibly, you can protect your privacy and embrace safe telemedicine platforms without compromising sensitive information. Stay informed, stay secure.


What Are AI Chatbots and How Do They Work?

AI chatbots are sophisticated tools trained on large amounts of data to imitate human conversation. They use Natural Language Processing (NLP) to identify and interpret user queries, which makes them useful in applications such as customer service and content development. Available examples include ChatGPT, Bing AI, and Google Bard, but these are general-purpose models.

However, these chatbots are not the same as AI applications built solely for healthcare. Unlike FDA-regulated diagnostic software, general-purpose chatbots are not held to comparable regulatory standards and do not promise HIPAA compliance. This makes uploading medical images to AI chatbots risky, because they may not provide adequate data security.

When it comes to secure AI medical tools or safe telemedicine platforms, prioritize tools built specifically for healthcare. Following AI data privacy tips helps minimize the privacy risks associated with AI chatbots in sensitive contexts.

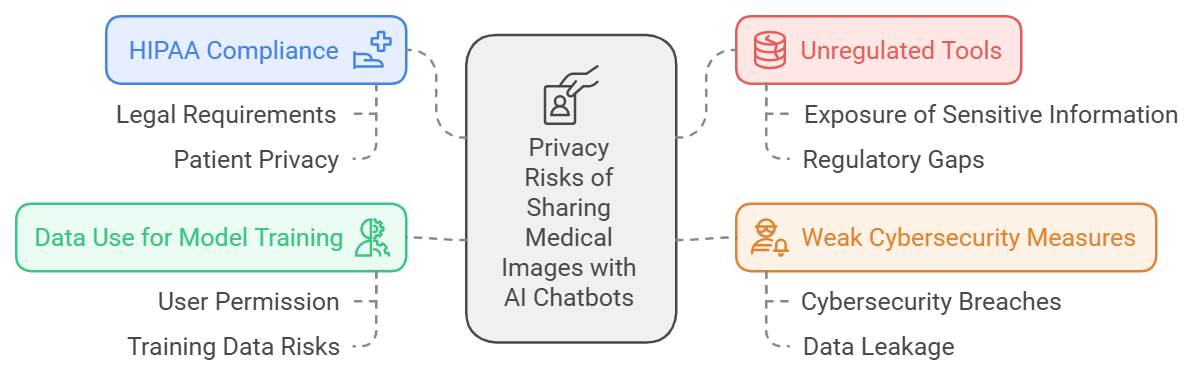

The Risks of Sharing Medical Images with AI Chatbots

When people upload medical images to AI chatbots, privacy concerns, including HIPAA compliance, are valid. Most such tools remain unregulated, which can expose patients’ sensitive health information. These AI chatbot privacy risks are amplified when the data is used for model training, often without users’ explicit permission.

Weak cybersecurity measures also raise the chance of breaches that expose personal medical records. For instance, a 2023 leak of thousands of sensitive images from an AI tool showed why secure AI medical tools are needed.

To avoid these risks, use safe telemedicine platforms with strong compliance, and follow AI data privacy guidelines to protect your data. Be careful: your privacy is on the line!

Legal and Regulatory Gaps in AI and Medical Data Privacy

The growing adoption of AI in healthcare raises AI chatbot privacy concerns when users upload medical images to AI chatbots. HIPAA is principally designed to safeguard data handled by healthcare professionals, and it frequently does not cover AI chatbots, which leaves gaps in the regulation of AI and HIPAA.

Furthermore, no specific law regulates data processed with the help of artificial intelligence, so it is unclear who is accountable when something goes wrong. Healthcare-specific AI tools are more likely to follow stricter prescribed protocols, but general-purpose platforms offer no similar guarantees. To minimize these risks, it is important to use secure AI medical tools, follow AI data privacy tips, and select secure telemedicine platforms in order to protect private health data.

Ethical Implications of Using AI for Medical Advice

Uploading medical images to AI chatbots raises concerns about AI chatbot privacy risks and compliance with AI and HIPAA standards. AI tools lack the ability to ensure informed consent, potentially exposing users to unethical data usage. Biases in training datasets can lead to inaccurate conclusions, putting patient safety at risk. Moreover, misuse of sensitive medical data for profit or non-medical research highlights the need for secure AI medical tools. To minimize risks, users should follow AI data privacy tips and choose safe telemedicine platforms. Critical scrutiny is essential to foster trust and innovation in AI-driven healthcare.

What Should You Do Instead?

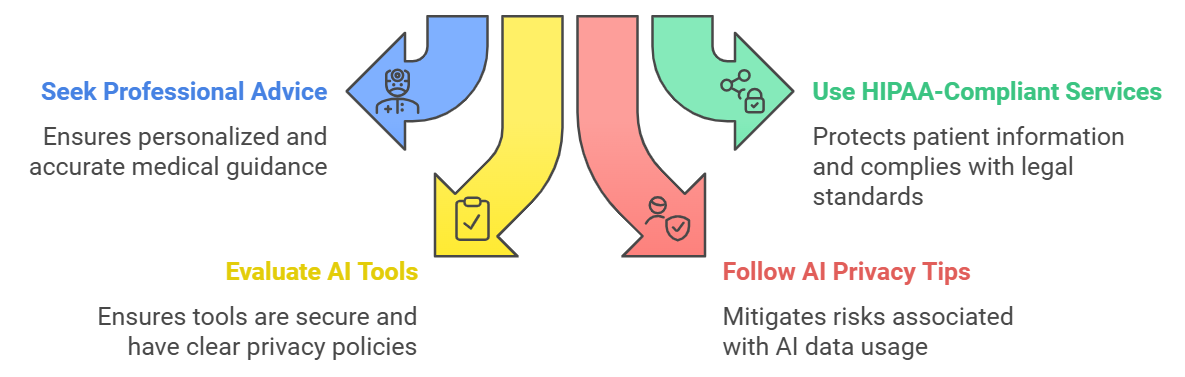

It is still advisable to seek medical advice from qualified professionals approved by the relevant health authorities rather than relying on the output of an AI chatbot. Professionals can give advice tailored to the individual patient.

When you need to share health information, use secure, HIPAA-compliant telemedicine services. These keep your medical images and information safe.

Evaluate AI tools carefully. Find out whether the service complies with HIPAA regulations, has a clear and easy-to-find privacy policy, and encrypts your data. Safe AI medical tools are transparent about how they handle data and keep it secure for the user.

To stay safe from other AI chatbot privacy risks, follow sound AI data privacy tips. Relying on reputable websites and applications with a track record of protecting user information is the safer choice.

By verifying the security of the tools you use and following recommendations from AI specialists, you can reap the advantages of AI in healthcare.

How to Safeguard Your Medical Privacy Online

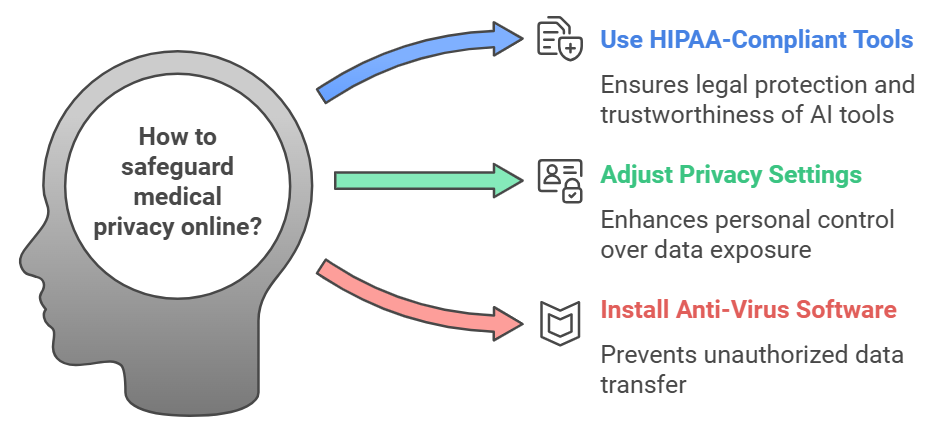

Your medical information needs safeguarding in a world where technology cannot always be trusted with personal information. Do not submit medical plans or images to unknown AI chatbots, because of the privacy concerns. Whenever you use an AI tool for health data, make sure it is HIPAA compliant.

Set the privacy settings on your devices to a high level and use encryption to protect your information. Watch out for scams, which can blend in with legitimate services and may pose as telemedicine services or even ‘secure AI medical tools’. Trust only reputable sources.

To enhance protection, install antivirus software to help prevent unwanted data transfer. If you follow the AI data privacy tips discussed above, you can use telemedicine platforms more safely and keep your personal health information protected. Put privacy first as threats evolve.

In summary, uploading medical images to AI chatbots poses serious privacy risks, because these tools often lack HIPAA compliance. Instead, prioritize secure AI medical tools and safe telemedicine platforms to protect sensitive data.

Empower yourself with AI data privacy tips and make informed choices to safeguard your medical information. Spread awareness by sharing these insights, helping others avoid AI chatbot privacy risks. Together, we can promote safer, smarter use of digital health tools. Stay informed, stay secure!

FAQs:

1. Can I trust AI chatbots with medical data?

No. In most cases, AI chatbots are not designed to protect sensitive medical information as they should. Their lack of HIPAA compliance can make them a risk to your personal information.

2. Are AI chatbots regulated by HIPAA?

No. HIPAA generally does not apply to general-purpose AI chatbots. For your medical data, use only tools built for healthcare that are legally required to protect your information.

3. What happens if my medical images are leaked?

Medical image leaks can result in identity theft, insurance fraud, or unauthorized use by third parties, with potentially lifelong consequences for your privacy and finances.

4. Which platforms are safe for medical consultations?

Teladoc Health and Zocdoc are examples of HIPAA-compliant platforms for booking consultations and sharing medical details through telemedicine.